Site crawling is an important aspect of SEO. If bots can’t crawl your site effectively, you will notice that many important pages are not indexed in Google or other search engines.

A site with proper navigation helps bots crawl and index it deeply. For a news site especially, search engine bots need to index new content within minutes of publishing, and that only happens when bots crawl the site as soon as you publish something.

- How to index a website in 24 hrs in Google search (Case study)

There are many things we can do to increase a site’s effective crawl rate and get faster indexing. Search engines use spiders (bots) to crawl your website for indexing and ranking.

Your site can only be included in search engine results pages (SERPs) if it is in the search engine’s index. Otherwise, visitors will have to type in your URL to reach your site. Hence a good, steady crawl rate is essential for the success of your website or blog.

Here I’m sharing the most effective ways to increase your site’s crawl rate and its visibility in popular search engines.

Simple and Effective Tips to Increase Site Crawl Rate

As I mentioned, you can do many things to help search engine bots find and crawl your site. Before I get into the technical aspects of crawling, here it is in simple words: search engine bots discover new pages by following links, so one easy way to get indexed quickly is to get your site linked from popular sites, for example by commenting or guest posting.

Beyond that, there are many things we can do from our end, such as site pinging, sitemap submission, and controlling crawling with robots.txt. I will talk about a few of these methods, which will help you increase your Google crawl rate and get bots to crawl your site faster and more thoroughly.

- Read: Why your blog posts are not getting crawled by bots

1. Update your site Content Regularly

Content is by far the most important criterion for search engines. Sites that update their content regularly are likely to be crawled more frequently. A blog on your site is an easy way to provide fresh content.

This is simpler than constantly adding new web pages or changing your existing page content. Static sites are crawled less often than those that regularly publish new content.

- How to maintain blog post frequency

Many sites publish content updates daily. Blogs are the easiest and most affordable way to produce new content on a regular basis, but you can also add new videos or audio streams to your site. I recommend publishing fresh content at least three times a week to improve your crawl rate.

Here is a little trick for static sites: add a Twitter search widget or your Twitter profile status widget, which can be quite effective. This way, at least part of your site is constantly updating, and that helps.

2. Server with Good Uptime

Host your blog on a reliable server with good uptime. Nobody wants Googlebot to visit their blog during downtime. In fact, if your site is down for long periods, Google’s crawlers will lower their crawl rate accordingly, and you will find it harder to get your new content indexed quickly.

There are many good hosting providers that offer 99%+ uptime, and you can find some of them on our suggested WebHosting page.
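To put an uptime percentage in perspective, the small calculation below converts it into hours of allowed downtime. It assumes a 30-day month; the function name is purely illustrative.

```python
def monthly_downtime_hours(uptime_percent: float, days: int = 30) -> float:
    """Hours of downtime allowed over `days` days at the given uptime percentage."""
    total_hours = days * 24
    return total_hours * (1 - uptime_percent / 100)


# Compare a few common uptime guarantees (30-day month assumed).
for sla in (99.0, 99.9, 99.99):
    print(f"{sla}% uptime -> {monthly_downtime_hours(sla):.2f} hours down per 30 days")
```

At 99% uptime a site can still be down for about 7.2 hours a month, which is why the difference between "99%" and "99.9%" matters if you want bots to find your site online.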

3. Create Sitemaps

Submitting a sitemap is one of the first things you can do to help search engine bots discover your site quickly. In WordPress, you can use the Google XML Sitemaps plugin to generate a dynamic sitemap and submit it to Google Webmaster Tools.

- How to submit sitemap to Google Search engine
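A WordPress plugin generates the sitemap for you, but it helps to know what the file contains. The sketch below builds a minimal sitemap in the sitemaps.org format using only Python’s standard library; the example URLs and dates are placeholders, and this is an illustration rather than what any particular plugin does internally.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML as a string for a list of (loc, lastmod) pairs.

    lastmod is optional (pass None) and should be a W3C date such as 2024-01-31.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in urls:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes & and < for us
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap([
        ("https://example.com/", "2024-01-31"),
        ("https://example.com/about/", None),
    ]))
```

Keeping `lastmod` accurate is what lets bots spot freshly updated pages without recrawling everything.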

4. Avoid Duplicate Content

Copied content decreases crawl rates. Search engines can easily detect duplicate content, which can result in less of your site being crawled. It can also lead the search engine to ban your site or lower its ranking.

You should provide fresh and relevant content, which can be anything from blog posts to videos. There are many ways to optimize your content for search engines.

Using those tactics can also improve your crawl rate. It is a good idea to verify that your site has no duplicate content, whether between pages on your own site or between your site and other websites. There are free duplicate-content checkers online that you can use to check your site’s content.
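If you want a rough check of your own pages before reaching for an online tool, the sketch below compares page texts using word shingles and Jaccard similarity, a common near-duplicate technique. This is a swapped-in illustration, not how Google detects duplicates; the shingle size `k=5` and the `0.8` threshold are arbitrary assumptions, and the `pages` mapping is hypothetical.

```python
import re


def shingles(text, k=5):
    """Set of k-word shingles from lowercased text with punctuation stripped."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def similarity(a, b, k=5):
    """Jaccard similarity of two texts' shingle sets (0.0 = nothing shared, 1.0 = same)."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


def near_duplicates(pages, threshold=0.8, k=5):
    """Yield (url_a, url_b, score) for every page pair at or above the threshold."""
    urls = list(pages)
    for i, u in enumerate(urls):
        for v in urls[i + 1:]:
            score = similarity(pages[u], pages[v], k)
            if score >= threshold:
                yield u, v, score
```

Usage: pass a dict of `{url: page_text}` (for example, post bodies exported from your CMS) and review every pair it yields.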

5. Reduce your site Loading Time

Mind your page load time. Note that crawling works on a budget: if the crawler spends too much time fetching your huge images or PDFs, there will be no time left to visit your other pages.

- 7 quick tips to speed up Website load time

- How to reduce blog load time
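A practical first step is finding the heavy images and PDFs the paragraph above warns about. The sketch below walks a local copy of your site and flags large files; the 200 KB limit, the extension list, and the `public_html` folder name are assumptions to adjust for your own setup.

```python
import os

# Hypothetical list of "heavy" asset types; extend it as needed.
ASSET_EXTS = (".jpg", ".jpeg", ".png", ".gif", ".pdf")


def scan_assets(root, exts=ASSET_EXTS):
    """Yield (path, size_in_bytes) for every image/PDF file under root."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.lower().endswith(exts):
                path = os.path.join(dirpath, name)
                yield path, os.path.getsize(path)


def flag_heavy(assets, limit_kb=200):
    """Return (path, size_kb) for assets larger than limit_kb, largest first."""
    heavy = [(path, size / 1024) for path, size in assets if size / 1024 > limit_kb]
    return sorted(heavy, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for path, kb in flag_heavy(scan_assets("public_html")):
        print(f"{kb:8.1f} KB  {path}")
```

Separating the disk scan from the filtering keeps the size check easy to reuse, for example on a file list exported from your host.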

6. Block access to unwanted pages via Robots.txt

There is no point letting search engine bots crawl useless pages like admin pages and back-end folders, since we don’t want them indexed in Google anyway.

A simple edit to your robots.txt file will stop bots from crawling such useless parts of your site. You can learn more about robots.txt for WordPress below:

- Optimize WordPress robots.txt file for SEO

- Use Robots.txt to protect site from duplicate content

- Controlling crawl index using Robots.txt
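Before uploading a robots.txt change, you can sanity-check it with Python’s standard `urllib.robotparser`. The rules below are an assumed WordPress-style example on a placeholder domain, not a recommended file. One caveat: Python’s parser applies rules in file order, while Google picks the most specific matching rule, so the `Allow` line is listed first here so that both interpretations agree.

```python
from urllib.robotparser import RobotFileParser

# Example rules (an assumption; adapt the paths to your own site).
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
Sitemap: https://example.com/sitemap.xml
"""


def build_parser(robots_txt):
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
for path in ("/wp-admin/options.php", "/wp-admin/admin-ajax.php", "/2024/01/my-post/"):
    url = "https://example.com" + path
    print(path, "allowed" if parser.can_fetch("Googlebot", url) else "blocked")
```

Listing the sitemap in robots.txt, as in the last rule, is another way to point bots at it.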

7. Monitor and Optimize Google Crawl Rate

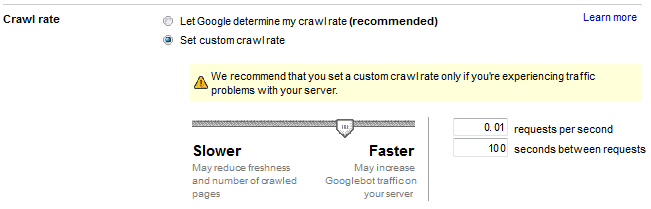

You can also monitor and optimize your Google crawl rate using Google Webmaster Tools (now Google Search Console). Just go to the crawl stats there and analyze them. You can also manually set your Google crawl rate and increase it, as shown in the screenshot above. However, I suggest using this setting with caution, and only when you are actually facing issues with bots not crawling your site effectively.

You can read more about changing Google crawl rate here.
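Alongside the crawl stats report, your own server logs show how often Googlebot visits. The sketch below counts Googlebot requests per day, assuming your host writes logs in the common Apache/Nginx "combined" format; the regex and function name are illustrative. User-agent strings can be spoofed, so Google recommends confirming real Googlebot traffic with a reverse DNS lookup.

```python
import re
from collections import Counter
from datetime import datetime

# Captures the date and the final quoted field (the user agent) of a
# combined-format log line. The log format is an assumption.
LOG_LINE = re.compile(r'\[(?P<day>\d{2}/\w{3}/\d{4}):[^\]]*\].*"(?P<agent>[^"]*)"\s*$')


def googlebot_hits_per_day(lines):
    """Return {ISO date: request count} for lines whose user agent mentions Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("agent"):
            day = datetime.strptime(match.group("day"), "%d/%b/%Y").date()
            hits[day.isoformat()] += 1
    return dict(sorted(hits.items()))
```

Usage: `googlebot_hits_per_day(open("access.log"))`, where the log file path is hypothetical. A sudden drop in daily hits is a good cue to check the crawl stats report for errors.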

8. Use Ping services:

Pinging is a great way to announce your site’s presence and let bots know when your site content is updated. There are many ping services, such as Pingomatic, and in WordPress you can manually add more ping services to notify many search engine bots. You can find such a list in our WordPress ping list post.
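Under the hood, a blog ping is usually an XML-RPC call to the `weblogUpdates.ping` method with your site name and URL. The sketch below uses Python’s standard `xmlrpc.client` to build and send one. The endpoint shown is the Pingomatic address WordPress lists by default, but treat it as an assumption and verify it before use; the site name and URL are placeholders.

```python
import xmlrpc.client

# Assumed endpoint: Pingomatic's XML-RPC address (verify before relying on it).
PING_ENDPOINT = "http://rpc.pingomatic.com/"


def ping_payload(site_name, site_url):
    """Build the XML-RPC request body for a weblogUpdates.ping call (no network)."""
    return xmlrpc.client.dumps((site_name, site_url), methodname="weblogUpdates.ping")


def send_ping(site_name, site_url, endpoint=PING_ENDPOINT):
    """Send the ping and return the server's response (makes a network call)."""
    server = xmlrpc.client.ServerProxy(endpoint)
    return server.weblogUpdates.ping(site_name, site_url)


if __name__ == "__main__":
    print(ping_payload("My Blog", "https://example.com/"))
```

WordPress sends these pings automatically to every service in its update services list, so this is mainly useful for understanding, or for static sites without that feature.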

9. Interlink your blog pages like a pro:

Interlinking not only helps you pass link juice but also helps search engine bots crawl the deep pages of your site. When you write a new post, go back to related old posts and add a link to your new post there.

This will not directly increase your Google crawl rate, but it will help bots crawl the deep pages on your site effectively.

- Quickly interlink your blog post with Insights plugin

- SEO Smart link plugin: Auto add Internal link
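One way to see why interlinking matters is to measure "click depth": the minimum number of links a bot must follow from your homepage to reach each page. The sketch below does this with a breadth-first search over an internal link map and lists pages no link path reaches. The `site` map is hypothetical; in practice you would collect it with a crawler or from your CMS.

```python
from collections import deque


def click_depth(links, home="/"):
    """Breadth-first search: minimum number of clicks from home to each reachable page."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def orphans(links, home="/"):
    """Pages that appear in the link map but cannot be reached from home."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    return sorted(pages - set(click_depth(links, home)))


# Hypothetical internal link map: page -> pages it links to.
site = {
    "/": ["/blog/"],
    "/blog/": ["/new-post/"],
    "/new-post/": ["/old-post/"],
    "/old-post/": [],
    "/forgotten/": ["/blog/"],
}
```

Here `/old-post/` sits three clicks deep and `/forgotten/` is unreachable from the homepage. Adding links from well-linked pages to those pages is exactly what the interlinking advice above achieves.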

10. Don’t forget to Optimize Images

Crawlers are unable to read images directly. If you use images, be sure to add alt attributes that give search engines a description they can index. Images are included in search results, but only if they are properly optimized. You can learn about image optimization for SEO here, and you should also consider installing the Google image sitemap plugin and submitting the image sitemap to Google.

This will help bots find all your images, and you can expect a decent amount of traffic from image search if you have set your image alt tags properly.
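To audit alt text on a page, the sketch below uses Python’s built-in `html.parser` to list every `<img>` whose `alt` attribute is missing or blank. It is a simple illustration; note that purely decorative images are often given an intentionally empty `alt=""`, so review the results rather than "fixing" every hit.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> tag whose alt attribute is missing or blank."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        # Also called for self-closing tags like <img ... />.
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        if alt is None or not alt.strip():
            self.missing.append(attributes.get("src", "(no src)"))


def images_missing_alt(html):
    """Return the src values of images lacking descriptive alt text."""
    finder = MissingAltFinder()
    finder.feed(html)
    finder.close()
    return finder.missing
```

Usage: feed it the HTML of a published post, for example `images_missing_alt(page_html)`, where `page_html` is whatever you downloaded or exported.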

Well, these are a few tips I can think of that will help you increase your site’s crawl rate and get better indexing in Google and other search engines. One last tip I would like to add: put a link to your sitemap in your site’s footer.

This will help bots find your sitemap page quickly, and from there they can crawl and index the deep pages of your site.

Do you follow any other method to increase your site’s Google crawl rate? Let us know! If you find this post useful, don’t forget to tweet it and share it on Facebook.
